Computation

Flynn's Taxonomy

Flynn's taxonomy is an important classification of computer architectures proposed in the 1960s. It is important to match the computation model to the problem being solved. The two main models used in parallel programming, both usually treated as refinements of Flynn's multiple-instruction, multiple-data category, are described below. Many languages allow the programmer to mix these models, and Mesham is no different.

Single Program Multiple Data

In SPMD, each process executes the same program on its own data. The benefit of SPMD is that only one set of code needs to be written for all processors, although that code can become bloated and offers no scope for optimising specific parts for specific architectures.
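As a rough illustration of the SPMD idea, the sketch below runs one function on several workers, with each worker selecting its own portion of the data from its rank. The names `program` and `run_spmd` are hypothetical, and Python threads merely stand in for processes, so this shows the structure rather than any real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def program(rank, size, data):
    """The single program every worker runs; only the rank differs."""
    chunk = data[rank::size]            # this worker's own share of the data
    return sum(x * x for x in chunk)    # identical code applied to different data

def run_spmd(data, size=4):
    """Launch `size` copies of the same program (threads as a stand-in, an assumption)."""
    with ThreadPoolExecutor(max_workers=size) as pool:
        partials = list(pool.map(lambda r: program(r, size, data), range(size)))
    return sum(partials)                # combine the per-worker results

print(run_spmd(list(range(10))))        # sum of squares of 0..9, prints 285
```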

Multiple Program Multiple Data

In MPMD, each process executes its own program on its own data. The benefit of MPMD is that the code can be tailored to run efficiently on each processor, and the code each processor executes stays relatively small. However, writing separate code for each processor in a large system is not practical.
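One common shape for MPMD is a master program paired with a different worker program. The sketch below is only an illustration under that assumption: `master_program`, `worker_program` and `run_mpmd` are hypothetical names, and threads with queues stand in for separate processes and their communication links.

```python
import queue
import threading

def master_program(tasks, outbox, inbox, n_workers):
    """Run only by the master: hand out work, then collect the results."""
    for t in tasks:
        outbox.put(t)
    for _ in range(n_workers):
        outbox.put(None)                 # one stop signal per worker
    return sorted(inbox.get() for _ in tasks)

def worker_program(inbox, outbox):
    """Run only by workers: square each item received until told to stop."""
    while (item := inbox.get()) is not None:
        outbox.put(item * item)

def run_mpmd(tasks, n_workers=2):
    to_workers, to_master = queue.Queue(), queue.Queue()
    workers = [threading.Thread(target=worker_program, args=(to_workers, to_master))
               for _ in range(n_workers)]
    for w in workers:
        w.start()
    results = master_program(tasks, to_workers, to_master, n_workers)
    for w in workers:
        w.join()
    return results

print(run_mpmd([1, 2, 3, 4]))            # prints [1, 4, 9, 16]
```

Each program stays small because it contains only its own role, which is the benefit described above; the cost is that every distinct role needs its own code.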

The Design of Parallelism

When designing how a parallel program will exploit parallelism, there are two main forms the parallel aspects can take. Which form to employ depends on the type of problem being solved.

Data Parallelism

In data parallelism, each processor executes the same instructions but works on a different data set. For instance, in matrix multiplication one processor may work on one section of the matrices whilst other processors work on other sections, solving the problem in parallel. As a generalisation, data parallelism often requires an intimate knowledge of the data and explicit parallel programming, but usually gives better performance.
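The matrix multiplication example can be sketched as follows, assuming the first matrix is split into blocks of rows and every worker applies the same routine to its block. The function names are hypothetical, and threads stand in for processors, so the sketch shows the decomposition rather than a performance gain.

```python
from concurrent.futures import ThreadPoolExecutor

def multiply_rows(a_rows, b):
    """Same instructions for every worker: multiply a block of rows of A by B."""
    cols = list(zip(*b))                 # columns of B
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a_rows]

def parallel_matmul(a, b, workers=2):
    step = max(1, -(-len(a) // workers))             # rows per worker (ceiling division)
    blocks = [a[i:i + step] for i in range(0, len(a), step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda blk: multiply_rows(blk, b), blocks))
    return [row for part in parts for row in part]   # reassemble in row order

print(parallel_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # prints [[19, 22], [43, 50]]
```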

Task Parallelism

In task parallelism, the program is divided into tasks, each of which is sent to a different processor so that the tasks are solved at the same time. Task parallelism commonly takes the form of processors executing distinct threads or processes, and at the time of writing it is the popular way for operating systems to take advantage of multicore processors. Task parallelism is often easier to implement than data parallelism but less effective.
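A minimal sketch of task parallelism, assuming a small text analysis job that splits naturally into three unrelated tasks. The task functions and `analyse` are hypothetical names chosen for illustration; each task is submitted to its own thread and the results are gathered at the end.

```python
from concurrent.futures import ThreadPoolExecutor

def count_words(text):
    return len(text.split())

def longest_word(text):
    return max(text.split(), key=len)

def count_vowels(text):
    return sum(ch in "aeiou" for ch in text.lower())

def analyse(text):
    """Run three distinct tasks at the same time and gather their results."""
    tasks = {"words": count_words, "longest": longest_word, "vowels": count_vowels}
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in tasks.items()}
        return {name: f.result() for name, f in futures.items()}

print(analyse("tasks run concurrently"))
# prints {'words': 3, 'longest': 'concurrently', 'vowels': 5}
```

The amount of parallelism here is capped by the number of tasks (three), regardless of how much data there is, which is one reason task parallelism tends to scale less well than data parallelism.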

Problem Classification

When considering both whether a problem will benefit from parallelisation and how to parallelise it, it is important to appreciate how the problem should be decomposed across multiple processors. There are two extremes of problem classification: embarrassingly parallel problems and tightly coupled problems.

Embarrassingly Parallel

Embarrassingly parallel problems are those which require very little or no work to separate into a parallel form; often there are no dependencies or communication between the processors. There are numerous examples of embarrassingly parallel problems, many of them in graphics, which is why many-core GPUs have become a popular choice for boosting performance.

Tightly Coupled Problems

The other extreme is that of tightly coupled problems, which are very difficult to parallelise and which, once parallelised, involve many dependencies between processors. In reality most problems sit somewhere between these two extremes.
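The two extremes can be contrasted with a pair of small, hypothetical routines. In `brighten` every output depends only on its own input, so the pixels could be shared out across processors with no communication at all. In `smooth_step` every cell depends on its neighbours, so if the cells were split across processors, the values at each boundary would have to be exchanged on every step.

```python
def brighten(pixels, amount):
    """Embarrassingly parallel: each output uses only its own input pixel."""
    return [min(255, p + amount) for p in pixels]

def smooth_step(cells):
    """More tightly coupled: each interior cell becomes the mean of its two neighbours."""
    new = cells[:]                       # end cells are left unchanged
    for i in range(1, len(cells) - 1):
        new[i] = (cells[i - 1] + cells[i + 1]) / 2
    return new

print(brighten([10, 250], 10))           # prints [20, 255]
print(smooth_step([0.0, 0.0, 4.0]))      # prints [0.0, 2.0, 4.0]
```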

Law of Diminishing Returns

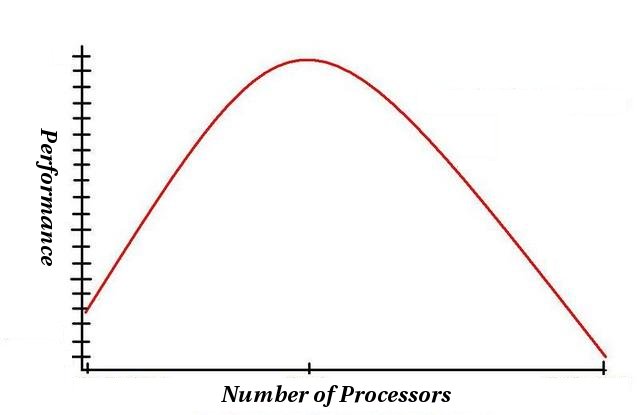

There is a common misconception that "throwing" processors at a problem will automatically increase performance, regardless of the number of processors or the problem type. This is simply not true because, compared with computation, communication is a very expensive operation. There is an optimum number of processors, beyond which the cost of communication outweighs the saving in computation gained by adding an extra processor, and performance drops. The figure above shows a graph of performance against number of processors for a typical problem. As the number of processors is increased, performance first improves; after reaching an optimum point, it drops off. It is not uncommon in practice for the performance on far too many processors to be very much worse than it was on a single processor!

In theory, a truly embarrassingly parallel problem (with no communication between processors) is not subject to this rule; the effect becomes more and more apparent as the problem type approaches that of a tightly coupled problem. The problem type, although a major consideration, is not the only factor shaping the performance curve: the types of processors, the connection latency and the workload of the parallel cluster all cause variations to this common bell curve.
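The shape of the curve can be reproduced with a toy cost model. The model below is an assumption made purely for illustration, not a measurement: run time is taken to be the computation shared evenly between `p` processors plus a communication cost that grows linearly with `p`. The default values of `compute` and `comm` are arbitrary.

```python
def run_time(p, compute=100.0, comm=1.0):
    """Assumed model: computation is divided by p, communication grows with p."""
    return compute / p + comm * (p - 1)

def speedup(p, **kw):
    """Performance on p processors relative to a single processor."""
    return run_time(1, **kw) / run_time(p, **kw)

def best_processor_count(max_p=64, **kw):
    """Processor count with the highest speedup under the assumed model."""
    return max(range(1, max_p + 1), key=lambda p: speedup(p, **kw))

print(best_processor_count())            # prints 10
print(speedup(128) < 1)                  # prints True: slower than one processor
print(best_processor_count(comm=0.0))    # prints 64: no optimum when communication is free
```

With these values the speedup peaks at 10 processors and falls below 1 by 128 processors. Setting `comm` to zero, as for a truly embarrassingly parallel problem, removes the peak altogether, so the best count is simply the largest one tried.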